There’s no question that law firms are investing more deliberately in their technology strategies. With new tools emerging almost weekly, adopting and adapting has become essential to staying competitive.

As part of this shift, pilots – trial runs of legal AI tools within smaller teams – have become commonplace. The goal: evaluate performance, usability, and fit before committing firm-wide.

But many law firms fall into the trap of managing multiple AI pilots with no clear path from experimentation to meaningful adoption. The result? Fatigue, fragmentation, and a missed opportunity to extract real value.

A pilot is simply a starting point. What distinguishes forward-thinking firms is how they assess tools beyond the pilot, making measured, strategic decisions grounded in legal expertise and operational fit.

In this piece, we offer a practical lens for evaluating legal AI tools beyond the pilot.

Pilots Aren’t Enough

Pilots are certainly helpful – but they’re not definitive. By design, they take place under controlled conditions: limited users, defined parameters, and often with direct vendor involvement. That can mask real-world complexity.

What a pilot won’t tell you is how a tool performs under the pressure of live client work. Whether it integrates smoothly across matters and teams. Or how it behaves when legal nuance, edge cases, or firm-specific practices come into play.

Without a clear strategy for evaluation, you risk mistaking activity for progress. Running five pilots doesn’t mean five steps forward. It often means five different data points that are hard to reconcile, draining time and attention without yielding a decision.

Pilots should inform, not replace, rigorous assessment. And that assessment requires a broader view: not just what the tool can do, but what it would take to operationalize it across your practice.

How to Really Evaluate Legal AI

To assess AI tools beyond a pilot program, ask the following questions in each of these key areas:

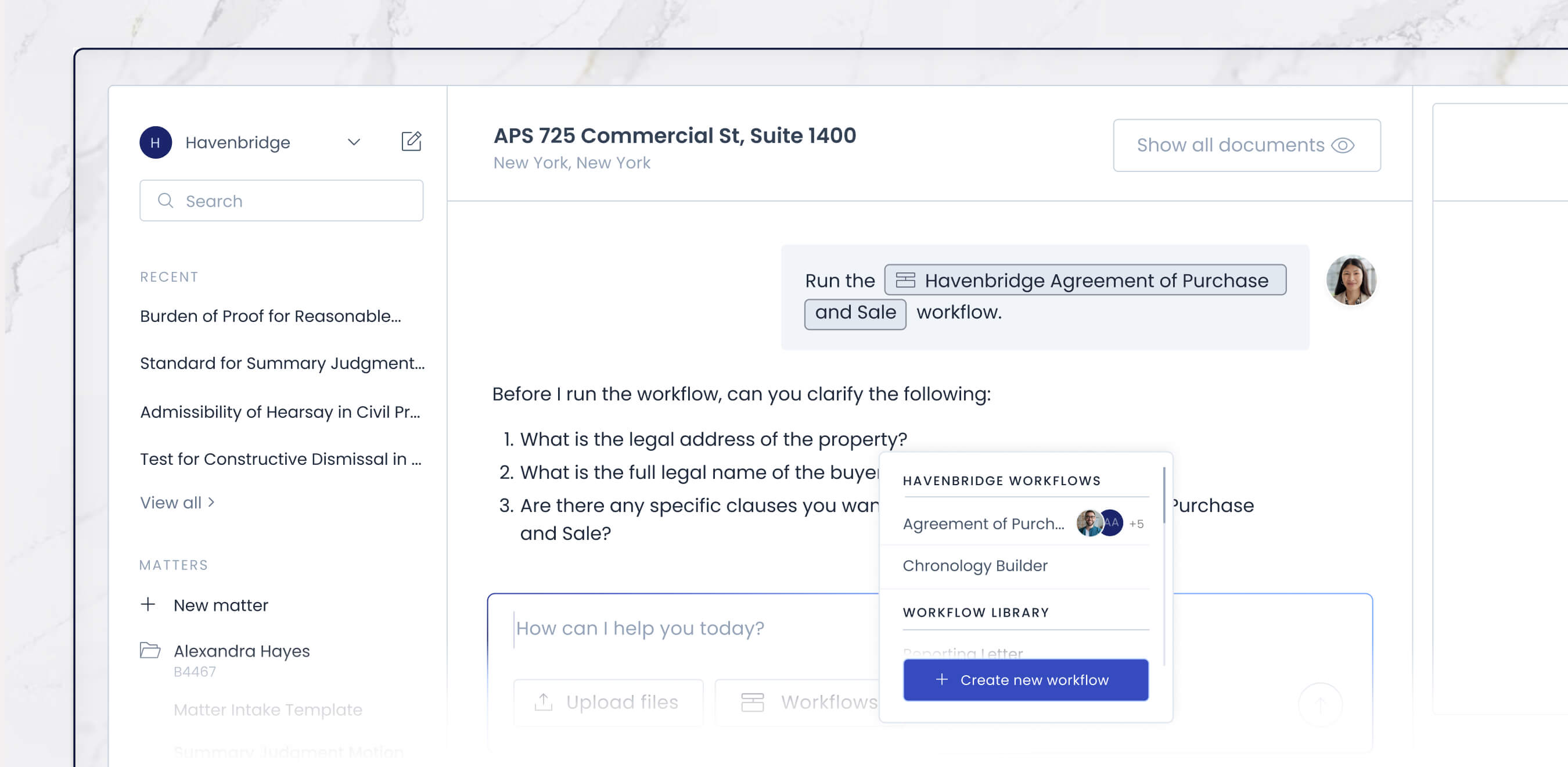

Alignment with Workflows

- Is the AI meaningfully integrated into the way your teams actually work?

- Does it reflect the complexity of real client matters?

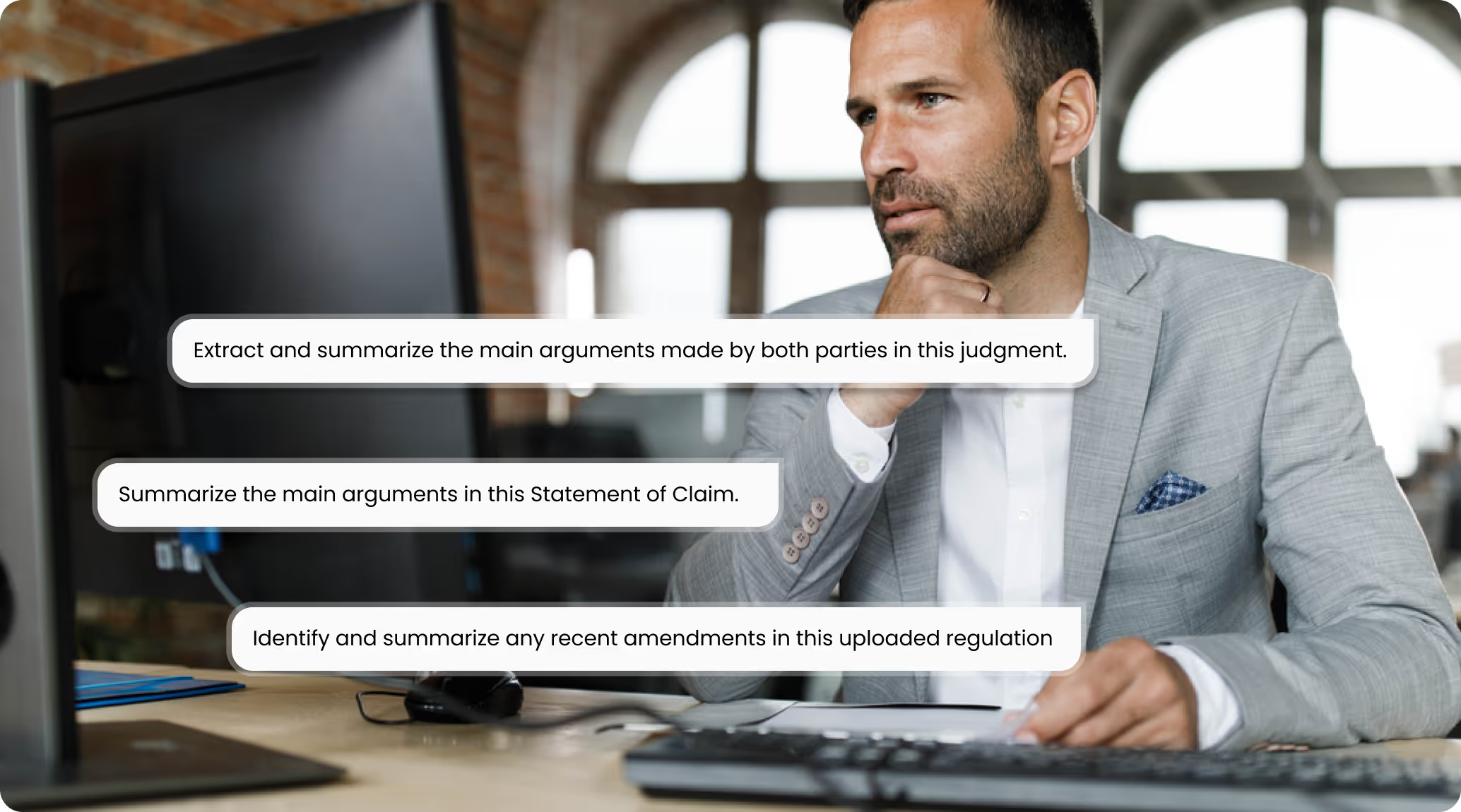

Depth and Precision of Output

- Beyond speed: is the AI delivering legally reliable, nuanced content?

- How does it handle edge cases, ambiguity, or conflicting authorities?

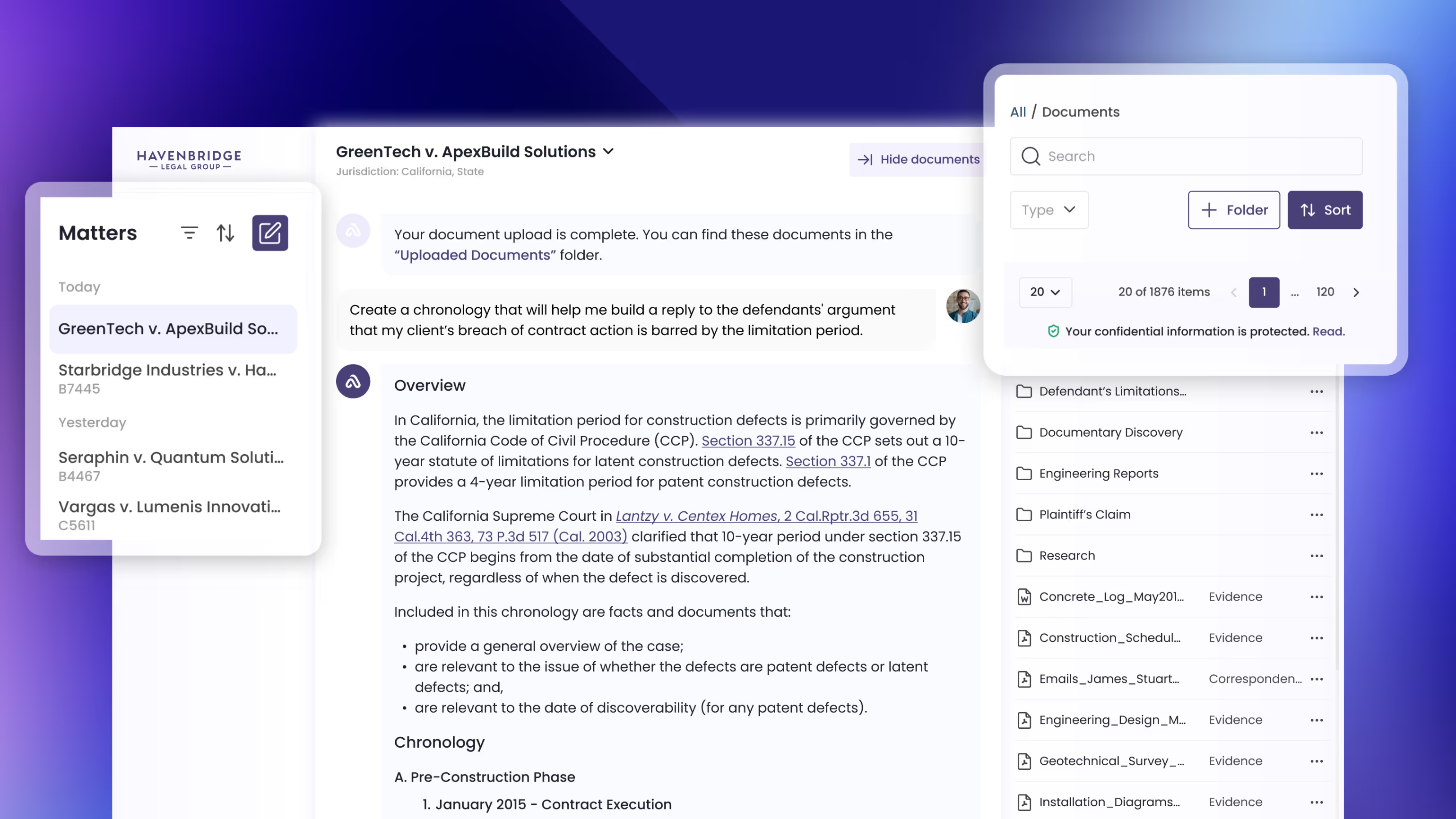

Control and Transparency

- Can your lawyers see and understand how the AI arrived at its outputs?

- Are citations traceable, and can responses be audited?

Training and Support

- How robust are the onboarding, documentation, and human support?

- Are you buying a tool, or a long-term partner in innovation?

A Strategic Framework for Comparison

When every tool promises efficiency, intelligence, and transformation, it becomes difficult to draw meaningful distinctions. Comparing tools requires a consistent, practical way to assess them against what truly matters.

Here’s a framework designed for that purpose, built around five areas that reveal not just what a tool does, but how well it fits your firm:

- Task Fit

Does the tool solve a real problem in your firm? Is it built for the kind of legal work your teams do, or is it a general solution looking for a use case?

- Legal Integrity

Can you trust the output? Look beyond speed and surface-level summaries. Evaluate how well the tool handles nuance, applies legal reasoning, and surfaces sources you can stand behind.

- Operational Integration

How does the tool fit into existing workflows? Can it be adopted without major disruption? Will it scale across teams, or does it require constant handholding?

- Governance & Risk

What visibility do you have into the tool’s decision-making? Can outputs be audited, cited, and explained? How does it handle sensitive information – and how does that align with your professional obligations?

- Vendor Maturity

Is the company behind the tool equipped to support enterprise-scale adoption? Look at their responsiveness, roadmap, and understanding of legal practice – not just their technical claims.

This kind of structured assessment helps firms cut through noise and focus on what enables real, firm-wide value.
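For firms that want to record these assessments consistently, the five areas above could be captured in a simple weighted scorecard. The sketch below is only one possible approach: the 1–5 scoring scale, the weights, and names such as `ToolAssessment` and `rank_tools` are illustrative assumptions, not part of the framework itself. A firm would choose its own weights, and the resulting number should prompt discussion rather than replace judgment.

```python
from dataclasses import dataclass

# The five framework areas. The weights are illustrative assumptions,
# not recommendations; each firm would set its own priorities.
WEIGHTS = {
    "task_fit": 0.25,
    "legal_integrity": 0.30,
    "operational_integration": 0.15,
    "governance_risk": 0.20,
    "vendor_maturity": 0.10,
}


@dataclass
class ToolAssessment:
    """Scores for one tool, one score per framework area (assumed 1-5 scale)."""
    name: str
    scores: dict

    def weighted_score(self) -> float:
        # Refuse to produce a number if any area was left unscored.
        missing = set(WEIGHTS) - set(self.scores)
        if missing:
            raise ValueError(f"{self.name}: missing scores for {sorted(missing)}")
        for area in WEIGHTS:
            if not 1 <= self.scores[area] <= 5:
                raise ValueError(f"{self.name}: {area} must be between 1 and 5")
        return sum(WEIGHTS[area] * self.scores[area] for area in WEIGHTS)


def rank_tools(assessments):
    """Return assessments ordered from highest to lowest weighted score."""
    return sorted(assessments, key=lambda a: a.weighted_score(), reverse=True)


if __name__ == "__main__":
    # Hypothetical tools and scores, for illustration only.
    tools = [
        ToolAssessment("Tool A", {
            "task_fit": 4, "legal_integrity": 3, "operational_integration": 5,
            "governance_risk": 3, "vendor_maturity": 4,
        }),
        ToolAssessment("Tool B", {
            "task_fit": 3, "legal_integrity": 5, "operational_integration": 3,
            "governance_risk": 4, "vendor_maturity": 3,
        }),
    ]
    for tool in rank_tools(tools):
        print(f"{tool.name}: {tool.weighted_score():.2f}")
```

Scoring every tool against the same five areas, with the same weights, makes results from separate pilots easier to reconcile. Requiring a score in every area also exposes what a given pilot never tested.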

Questions to Bring to Your Next Legal AI Debrief

Too often, post-pilot evaluation is left to individual users or small teams, with feedback collected in silos. This may capture user sentiment, but it doesn’t surface the strategic insights that firm leaders need to make sound decisions.

The real value comes from discussion: conversations that assess the AI beyond its practical use and look at how it affects client service, professional risk, and firm culture.

To guide the conversation, here are some key questions that managing partners and other firm leaders should be asking together:

What did this pilot actually demonstrate – and what didn’t it test?

Clarify the scope and limitations so you’re not over- or underestimating the impact.

Where does this tool fit within our broader strategy?

Consider how it aligns with your firm’s goals around efficiency, quality, or differentiation.

What would it take to adopt this at scale, technically, culturally, and operationally?

Look beyond feasibility to readiness: are your systems, teams, and clients ready for this shift?

Does this tool enhance how we practice law or simply change how we work?

Focus on whether it strengthens core legal thinking and service delivery.

What risks does it introduce, and how would we manage them?

Discuss confidentiality, accuracy, and oversight with the same rigor you apply to legal advice.

These directional questions help leadership move from “Did people like it?” to “Is this a sound move for our firm?”

Conclusion

Pilots are useful, but they’re not a substitute for thoughtful assessment, and they don’t guarantee progress. In a landscape crowded with emerging tools, the real differentiator isn’t who runs the most pilots. It’s who makes the most informed decisions afterward.

For managing partners, that means asking the right questions, applying a consistent framework, and grounding every decision in how the tool enhances the firm’s core strengths.

Legal AI isn’t a race. It’s a strategy. And like any good strategy, success lies in clarity, alignment, and execution.
